From Polygons to Photorealism: The Evolution—and Plateau—of 3D Game Graphics

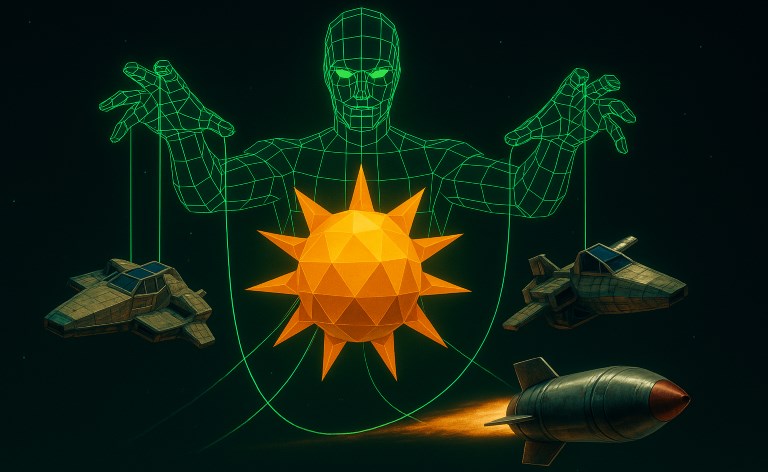

The Era of Clever Constraints

In the early days of 3D gaming, hardware limitations forced developers to be clever. The visuals that defined the era, from low-poly models and billboarded sprites to pre-rendered backdrops, weren't just stylistic choices but practical necessities. Every polygon had a cost, and every lighting trick was a compromise. Yet out of those limitations grew a golden age of innovation.

Turning Limitations Into Innovation

Take games like Quake, Tomb Raider, and Final Fantasy VII. Each made the most of the hardware of its time, and each did so in strikingly different ways. Quake leaned into fully 3D environments, using software rendering and precomputed lightmaps to build its dark, gritty atmosphere. Tomb Raider featured angular characters and blocky, grid-based worlds that became iconic not despite their limitations but because of them. Final Fantasy VII sidestepped real-time rendering for much of its world, instead presenting lush pre-rendered backgrounds and moving 3D character models across them. These games didn't just work around their limitations; they turned them into defining characteristics.

Leaps in Generational Power

Each new generation of graphics cards and consoles brought significant leaps: the move from software rendering to hardware acceleration, then hardware T&L (transform and lighting), then programmable shaders. Each step delivered clear visual benefits. By 2004, Doom 3 and Half-Life 2 were pushing lighting, animation, and physics into new territory, with Doom 3's fully dynamic lighting and shadows and Half-Life 2's physics-driven gameplay and expressive facial animation. Visuals didn't just get better; they evolved.

The Plateau of Realism

But something changed over the last decade. As games neared photorealism, the pace of visual progress slowed. High-fidelity techniques such as global illumination, subsurface scattering, and ray tracing deliver spectacular results, but at steep performance costs. The problem is that these improvements aren't always obvious to the average player. When a scene already looks real, doubling the polygon count or pushing texture resolution from 4K to 8K yields diminishing returns. What once felt like huge jumps between generations now feels more like refinement.
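
As a rough, back-of-the-envelope illustration of those diminishing returns, the sketch below estimates the memory cost of square textures at different resolutions. It assumes uncompressed RGBA8 data (4 bytes per texel) and an optional full mipmap chain; real games typically use block-compressed formats such as BC7, which shrink these numbers considerably. The helper name `texture_bytes` is hypothetical, chosen only for this example.

```python
# Hypothetical back-of-the-envelope helper, assuming uncompressed RGBA8 textures.
BYTES_PER_TEXEL = 4  # 8 bits each for red, green, blue, and alpha
MIB = 1024 * 1024


def texture_bytes(side: int, mipmaps: bool = True) -> int:
    """Approximate memory for a square side x side RGBA8 texture.

    When mipmaps is True, every level down to 1x1 is summed,
    which adds roughly one third on top of the base level.
    """
    total = 0
    size = side
    while True:
        total += size * size * BYTES_PER_TEXEL
        if not mipmaps or size == 1:
            break
        size //= 2  # each mip level halves the width and height
    return total


for label, side in [("2K", 2048), ("4K", 4096), ("8K", 8192)]:
    base = texture_bytes(side, mipmaps=False) / MIB
    full = texture_bytes(side) / MIB
    print(f"{label}: {base:.0f} MiB base, {full:.1f} MiB with mips")

# Compare texel count with the pixel count of a 4K (UHD) display.
screen_pixels = 3840 * 2160
print(f"8K texels per 4K screen pixel: {8192 * 8192 / screen_pixels:.1f}")
```

Each doubling of resolution quadruples the memory cost, so an 8K texture takes four times the space of a 4K one. Yet an 8K texture already holds about eight texels for every pixel of a 4K display, and with mipmapping the GPU samples lower-resolution levels whenever a surface is far away, so the extra detail only shows up when the camera is very close to that surface.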

Style Over Specs

Games like The Last of Us Part II, Red Dead Redemption 2, and Cyberpunk 2077 have reached a level where stylization and direction matter more than sheer rendering muscle. Once you're able to render a believable scene, it becomes less about adding detail and more about how you use the tools. It’s a creative turning point: technology is no longer the main limiting factor. Imagination is.

A Full-Circle Moment

In a sense, the industry has come full circle. We're seeing renewed interest in stylized graphics, from indie darlings like Hades and Tunic to AAA experiments like Hi-Fi Rush. Developers are embracing non-photorealistic styles not because they have to, but because they can. Limitations no longer dictate style; they inform it. As a result, we're seeing a broader range of visual expression than ever before.

Looking Ahead

As 3D hardware continues to improve, we may well see new breakthroughs. But the era of obvious, generational leaps in visuals is behind us. The future lies not in chasing realism, but in harnessing the freedom to create something uniquely beautiful, meaningful, and memorable.

🎮 Join the Discussion

What do you think—have we reached the peak of 3D visual evolution, or is there still another revolution waiting to happen?

Share your thoughts in the comments below, or connect with me on X.com.

If you enjoyed this article, consider subscribing or checking out some of my other posts on the evolution of game development and technology.